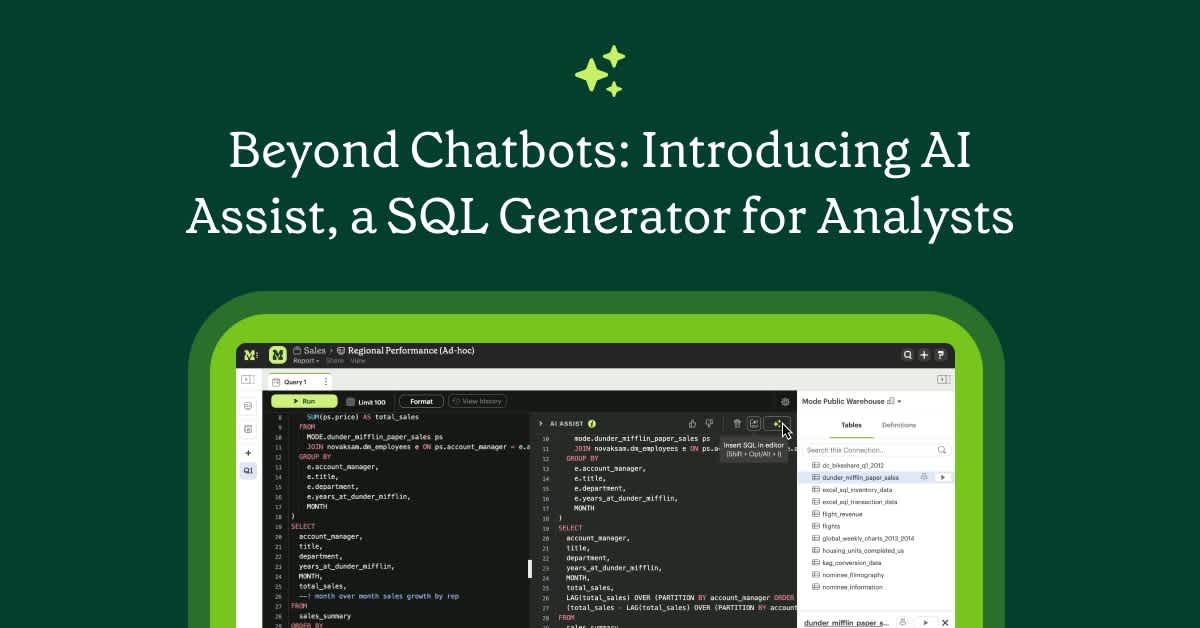

Beyond Chatbots: Introducing AI Assist, a SQL Generator for Analysts

Introducing AI Assist, which helps you quickly map out the structure of a query before you dive into the details. Take a look at how it helps analysts make faster decisions with the power of AI.